If you’re interested in security and privacy, and you’re also explicitly using “artificial intelligence” (AI) chatbots, we want to raise some concerns we have regarding their use.

These are new technologies produced from a capitalist center, so we know that the only thing that matters to the large corporations developing them is maximizing their profits: if that requires taking care of users’ privacy and security, they will do so. But if the competition is about who can launch a new version with more functionality faster, we can run into problems. And that is precisely the situation we are in.

We are not going to cover the fact that search engines like Google [1] or messaging apps like WhatsApp are building AI directly into their products. Nor will we cover the fact that now everything “is” or has AI: scripts, household appliances, prosthetics, websites. Here we are going to focus on the case where you explicitly open a chatbot to obtain results, whether for work, health, emotional matters, or for fun.

From everyday use to systematic surveillance

The use of these technologies is widespread and has deeply penetrated connected society (let’s not forget the digital divides), so much so that it has displaced search engines as a way to stay informed, solve problems, and deepen knowledge. Some statistics indicate that in 2023, 35% of people had already stopped using search engines, a share that keeps growing, and that 70% of people surveyed by Consumer Reports stated that they had used this type of technology in some way in the last three months [2]. In practice, this means that millions of people regularly use chatbots to which they entrust personal, work-related, emotional, and political queries, handing over large amounts of information without there yet being a clear and shared understanding of how these data are protected, stored, analyzed, or reused.

It is worth noting that everything you write and every file you upload will be stored, analyzed, and used by these platforms to improve their businesses: whether to “retrain the models,” to “profile you” in order to sell you advertising, to sell you the very thing you were just talking about with your friends, or even to learn your political leanings and offer you the discourse you want to hear, thus manipulating elections [3].

Supposedly, not all companies do this in the same way; some seem to find it beneficial to present themselves as concerned about their users’ privacy, as when Apple boasted about not collecting as much metadata as Facebook [4]. Amanda Caswell has analyzed the privacy of several chatbots in her article “Privacy comparison of ChatGPT, Gemini, Perplexity, and Claude,” which we recommend reading.

We also know that large corporations use this Big Data [5] in collaboration with states to carry out “mass surveillance” of the entire population, something Shoshana Zuboff has called “surveillance capitalism” [7].

Your data, chatbots, and their risks

That said, we do not want to promote this highly controversial technology, which has been released upon humanity without any kind of control. But we know that, to a greater or lesser extent, all of us end up explicitly using it in some way (not counting all the ways we use it without knowing it, of course). We also know that it can produce very good results, for example by analyzing medical results to find problems when there are millions of options to check. We neither oppose all uses nor promote total use, as some people seem to be doing.

All of this has to do with people’s privacy, but there are also elements that involve security problems [8]. For example:

What happens when these platforms are breached and someone extracts that “Big Data” about you? [9]

What happens if someone downloads all your “prompts” and all your files from one of these companies? That attacker might obtain your personal information, your address, your health or emotional status, images of your children, work documents, and so on.

If you want to check this and you use one of these bots with your account—say, ChatGPT—you can go to your profile (bottom left), choose “Settings,” then “Data Controls” > “Export.” The platform will prepare and send you a compressed file with all your conversations and all the files you uploaded. If you make medium or advanced use of it, you’ll be surprised by the amount of information they have about you—and that is only from the interface between the bot and you; it does not mean that it’s all they have about you.
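
If you want a rough first look at what such an export contains, you could scan its text for obvious personal identifiers. The following is a minimal Python sketch, not a tool provided by any platform: it assumes you have already unpacked the export and loaded its text into a string, and the names `PATTERNS` and `count_identifiers`, as well as the two regular expressions, are our own illustrative assumptions. Real personal data takes many more forms than these patterns catch.

```python
import re
from collections import Counter

# Illustrative patterns only (our assumption): they catch simple email
# addresses and phone-like digit sequences, nothing more.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "phone": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def count_identifiers(text: str) -> Counter:
    """Count how many matches of each illustrative pattern appear in text."""
    counts = Counter()
    for label, pattern in PATTERNS.items():
        counts[label] = len(pattern.findall(text))
    return counts


# Hypothetical sample standing in for text taken from an exported conversation.
sample = "Write to ana@example.org or call +34 600 123 456 about my results."
print(count_identifiers(sample))
```

A count of zero does not mean the export is free of sensitive material: addresses, health details, or images will not match simple patterns like these.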

Usage strategies, privacy by design, and anonymization

So if you still plan to use them, we recommend adding a section on chatbot use to your personal threat model, or to your organization’s digital protection strategy. How? We can’t answer that directly: it will depend on the type of use and the depth at which you are using these technologies, the type and size of your organization, and other factors.

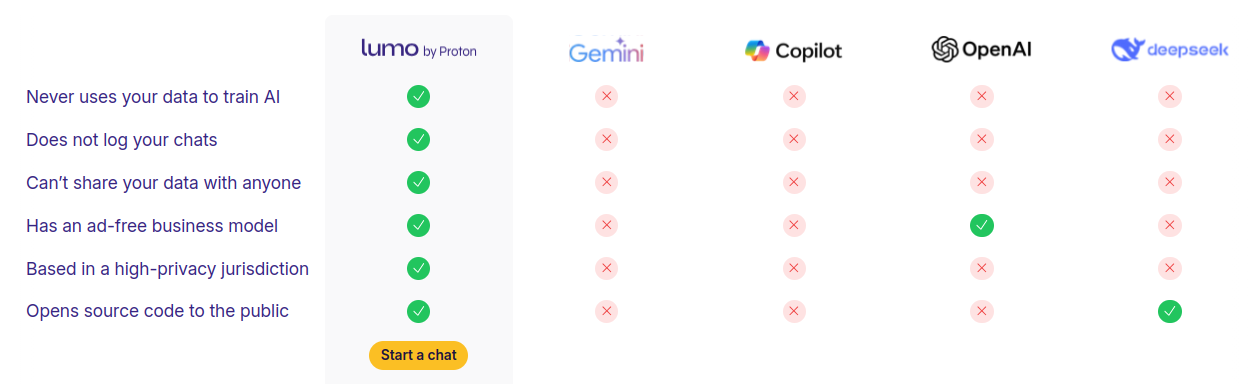

Along these lines, we can recommend two platforms that focus on promoting users’ privacy:

Duck.ai (by DuckDuckGo) – allows you to use several well-known LLMs in a self-hosted installation, without even the possibility of identifying yourself.

LUMO (by the Proton Foundation) – with certain usage limits and optional identification.

And here we reproduce a comparison provided by the Proton Foundation itself:

If you still decide that you want to keep using the well-known, famous, and corporate ones directly, there are several cases in which (at the time of writing this article) it is not necessary to identify yourself:

This is a bit more inconvenient, because several of these will limit you and repeatedly nag you to identify yourself, and sometimes the results will not be as good as you would like, since the “agents” will know less about you. Nor is it a guarantee that they won’t identify you anyway and include you in their Big Data. What does change is that all your “prompts” (everything you could otherwise download from your account) will not be available as historical material in a profile. Your footprint will be smaller, and so will the association with your real identity. If you want to go further in terms of protection, you can try using some of these with Tor Browser. It won’t be easy, since they put up many barriers to its use, but you can try.

So, in conclusion, we can tell you this: if you are going to use this very novel and controversial technology, do it without identifying yourself. We hope that in the future we will be able to rely on cutting-edge technology, but with “privacy and security by design.”

Featured image: Remix of Stadio Alicante, licensed under CC BY 2.0.

Notes:

1. By the way, if you already hate that Google gives you results as if it were a bot, you can do two things: i) use another search engine like DuckDuckGo or SearX (list of many instances), or ii) use this cleaner search: https://udm14.com/

2. See report: https://explodingtopics.com/blog/chatbot-statistics

3. As an example of the latter, we can cite the Cambridge Analytica case, among others.

4. See the metadata collection scheme of iMessage vs. WhatsApp and others in our article about Signal.

5. A term that has gone out of fashion, but not out of relevance. Read more at: https://es.wikipedia.org/wiki/Macrodatos

6. Access Snowden’s biography and the details of his case at: https://es.wikipedia.org/wiki/Edward_Snowden

7. See https://es.wikipedia.org/wiki/Capitalismo_de_vigilancia. This practice has been denounced for decades, with the first strongly documented proof emerging from Edward Snowden’s leaks [6].

8. If you’re a bit of a nerd and want to dig deeper into the security issues of these technologies, we recommend this video by “Chema Alonso” on the topic.

9. A simple search is enough to verify how many times this has already happened.
